The most advanced AI models possess hidden capabilities that extend far beyond what is immediately visible. In this article, we take a closer look at how you can uncover and leverage these embedded functions to solve complex, well-defined and useful tasks.

Generative AI models, and particularly the large language models behind ChatGPT, Gemini and Claude, have impressed people for a couple of years now with their ability to cross established domains and create something new. It feels like real, creative intelligence when complex accounting concepts are explained in the language of Duckburg, or when image generation models design new furniture inspired by big, soft cartoon teddy bears.

The fact that the models don't stop at the edge of one domain, but instead draw inferences across into others, is understandably fascinating. It is what allows them to always provide an answer, even when they are at the edge of their knowledge. They can guess at what is left unsaid, jump to other areas of knowledge and draw inferences through purely statistical connections in language.

But it is not what creates the truly significant productivity gains when the technology is put to work for us.

Breadth and depth

At a fundamental level, you can assess the (very) large models on two parameters: breadth and depth. While breadth of training data and training has been decisive in the vast majority of early experiments and implementations, and is also what created the fascination described above, how deeply the models have been trained within individual areas has been largely overlooked or considered trivial.

The challenge has been that we were effectively forced to treat depth as trivial, because we could not verify it: we don't know the training data, so we don't know what the models have been trained on, or how much of it. As a result, we have approached them more like an unfathomable oracle, where the breadth and the unexpected connections were precisely what made them interesting.

Nevertheless, it is depth, the specialised function, that has delivered the greatest directly identifiable gain: coding. Developers have embraced large language models to such an extent that many can no longer imagine working without them. The reason is that the models are trained on enormous amounts of code, so they handle code exceptionally well.

What does a language model understand?

The question therefore becomes: "What else do the models understand?"

The tech company Anthropic, which makes the language model Claude, one of ChatGPT's most direct competitors, published a major study in May 2024 investigating which functions (the study calls them 'features': groupings of related concepts and connections) exist inside a language model's "brain".

By analysing the model's internal activity at scale, they identified millions of such features, including one for the Golden Gate Bridge. A great deal of knowledge was associated with the bridge (red, San Francisco, concrete and the like), which meant the model had a linguistic concept of what the Golden Gate Bridge was. When they artificially amplified this feature, forcing it to be strongly active regardless of the input, the model began to behave differently than before. For instance, it began to describe itself as the large red bridge rather than as a language model. The model contained countless such features, and their existence points to hidden expertise within the models.

And it is this expertise we need to uncover and build with.

The models also have, for example, a function for invoices. They have seen so many different invoices that they know what they look like, and they can also interpret an unusual invoice as an ordinary one: there is logic in the models that lets them reason that this is a customer number, this is a contact person, and so on. Likewise, they can recognise the signature in an email about, say, a change of address, so that the two addresses in the same email are not confused, because they also have a function for email structures and formats.

Uncovering these functions also gives us predictability. Try, for example, asking ChatGPT to complete the sentence "To be or not to be; that is …", and you will always get "the question". But if you instead ask it to complete the sentence "The CFO went to Mars, because …", you get a different, unpredictable answer every time. That is because here the model uses its breadth to guess at something it lacks sufficient depth for. There is a Hamlet function hidden in a language model, but no astronaut-CFO function.

Pinpointing functions is a game-changer

When we do prompt engineering, that is, design questions and tasks for an AI model, we always try to frame the task and its context. That is the whole point: we don't want the model to answer in breadth, but from within a deeper context. Developers who use the models understand this intuitively, but it also needs to be understood at an analytical, conceptual level.

The tasks we give a language model must therefore be two things: definable, so that a function can be identified or ruled out, and fundamentally well known, so that we can expect the models to have a function that serves our purpose. This also means we must, for a moment, look past the chatbot that can answer everything and instead seek out sharp functions that solve known problems. Rather than AI agents, which the AI market is currently talking about a great deal, the benefit for most companies lies in defining and developing AI roles. The difference is simply that an agent is expected to do everything you ask of it, autonomously, whereas a role only comes on stage at the right moment and with precise lines.

By pinpointing the role, we regain predictability and get task solutions we can measure. We also get the opportunity to use the models for something technology has never before handled so easily: structuring the unstructured. Through roles that build on identified functions, and that can contextualise and logically interpret different inputs, a language model can bring order to data that would otherwise be arbitrary. That is the real game-changer in the new wave of AI, because it expands the entire digital playing field.
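To make the idea of a role with "precise lines" and measurable results a little more concrete, here is a minimal Python sketch. It assumes a hypothetical invoice-reading role: the `Role` class, the field names `customer_number` and `contact_person`, and the sample replies are all illustrative, and the call to the language model itself is deliberately left out. The sketch builds a narrowly framed prompt, rejects any reply that does not stick to the agreed fields, and scores replies against hand-checked answers so the role's accuracy can be tracked.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class Role:
    """A narrowly scoped task for a language model (hypothetical sketch)."""

    name: str
    instructions: str
    required_fields: tuple[str, ...]

    def build_prompt(self, document: str) -> str:
        # Frame the task tightly: one job, a fixed set of output fields.
        fields = ", ".join(self.required_fields)
        return (
            f"{self.instructions}\n"
            f"Reply with only a JSON object with exactly these keys: {fields}. "
            "Use null for any value that is not in the document.\n\n"
            f"Document:\n{document}"
        )

    def validate(self, reply: str) -> dict:
        # Reject anything that is not the agreed "lines" for this role.
        data = json.loads(reply)  # raises json.JSONDecodeError (a ValueError) on non-JSON
        if not isinstance(data, dict):
            raise ValueError("expected a JSON object")
        missing = [f for f in self.required_fields if f not in data]
        unexpected = [k for k in data if k not in self.required_fields]
        if missing or unexpected:
            raise ValueError(f"missing={missing}, unexpected={unexpected}")
        return data


def field_accuracy(role: Role, replies: list[str], expected: list[dict]) -> float:
    """Share of fields the role got right; invalid replies score zero on every field."""
    correct = total = 0
    for reply, truth in zip(replies, expected):
        try:
            data = role.validate(reply)
        except ValueError:
            data = {}  # an off-script reply counts as wrong on every field
        for field in role.required_fields:
            total += 1
            if field in data and data[field] == truth[field]:
                correct += 1
    return correct / total if total else 0.0


# Hypothetical role and hand-checked test data, for illustration only.
INVOICE_ROLE = Role(
    name="invoice_reader",
    instructions="You read supplier invoices and extract the customer number and contact person.",
    required_fields=("customer_number", "contact_person"),
)

replies = [
    '{"customer_number": "C-1001", "contact_person": "Jane Doe"}',
    "The customer number is probably C-2002.",  # off-script reply, not JSON
]
expected = [
    {"customer_number": "C-1001", "contact_person": "Jane Doe"},
    {"customer_number": "C-2002", "contact_person": None},
]

print(INVOICE_ROLE.build_prompt("Invoice 4711, customer no. C-1001, attn. Jane Doe"))
print(field_accuracy(INVOICE_ROLE, replies, expected))  # 0.5
```

The split mirrors the role idea: the prompt narrows the task, validation keeps the model to its lines, and the accuracy score turns predictability into a number you can follow across a set of test invoices.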

The short message: By focusing the large AI models, you get concrete benefits

Having spent a few years testing AI and spreading the message throughout their organisations, companies now face the challenge of creating real value with generative AI. The truth is that while the models first impressed by resembling a C-3PO that could answer everything, the real benefit lies in finding the models' inner functions and strapping them into a straitjacket built around those functions. The models know an enormous amount, but what matters is how much they know about one topic and one task, not how widely they can spread across irrelevant areas.

Treat them like a candidate you are about to hire: knowledge of the specific tasks always matters more than general knowledge. Identifying precise (often unstructured) tasks and testing how sharp the models are on them is therefore crucial to getting value from large language models.

Competencies all the way round

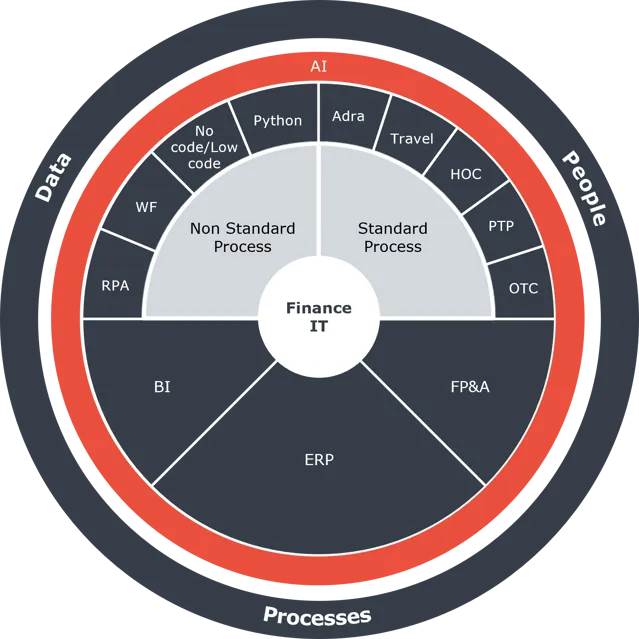

Finance IT Services' digitalisation circle shows the areas of competency we can bring into play when your company's financial processes are to be digitalised from a holistic starting point.

When we help digitalise and automate a finance function, we make a point of putting together the applications and tools that meet your specific needs, in a way that ensures the solution supports the data, the processes and the people who work hard behind the scenes.

Would you like to understand more about both the depth and breadth of generative AI?

Then give our AI Lead, Lasse Rindom, a call. His engaging presentations are sure to leave you with plenty of AI food for thought. And if you're looking for inspiration on how to use and implement generative AI in line with your company's purpose, vision and strategy, he's also the man to talk to.
